Can AI replace programmers? Here’s what happened when I put ChatGPT to the test.

Can AI code for us? Discover ChatGPT's strengths and pitfalls - and why human programmers still matter for real-world solutions.

Jan Vrtiska

1/19/2025 · 6 min read

I’ve read a lot of stories about companies replacing programmers with AI. I use AI (mainly ChatGPT) on a regular basis, but I never thought it could be as good as a developer with a few years of experience.

As a manager, I don’t have much time for programming, so when I wanted a small script to fetch data from our company’s Jira to calculate some statistics, I decided to give AI’s programming skills a try.

The expected output wasn’t a complex application with a database or an elaborate user system. The script needed to do three things:

Fetch data using the Jira REST API

Calculate some statistics

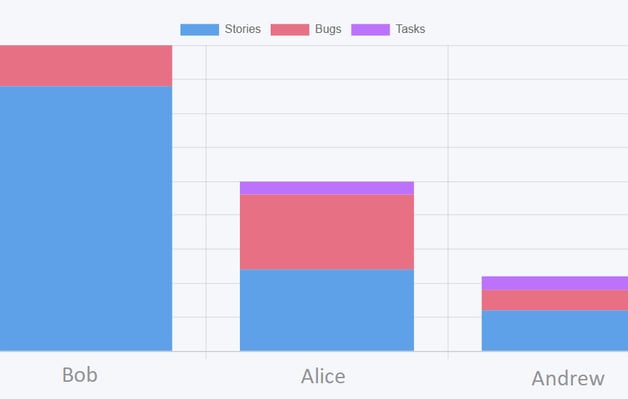

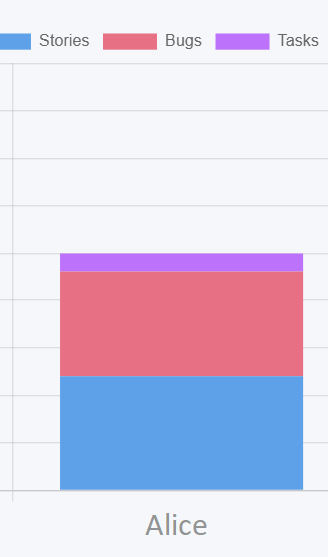

Generate an HTML page with charts and tables
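To make the three steps above more concrete, here is a minimal JavaScript sketch of the first and last one. It is not the script ChatGPT produced. The `/rest/api/2/search` path, Basic authentication, and all function names (`buildSearchRequest`, `fetchIssues`, `renderTable`) are assumptions for illustration, so check which endpoint and authentication scheme your Jira instance actually supports.

```javascript
// Assumption: the Jira instance exposes /rest/api/2/search and accepts Basic auth.
// All function names here are hypothetical, chosen for this sketch.

// Step 1a: build the search request (pure function, no network access).
function buildSearchRequest(baseUrl, user, token, jql) {
  const params = new URLSearchParams({ jql, maxResults: "50" });
  const credentials = Buffer.from(`${user}:${token}`).toString("base64");
  return {
    url: `${baseUrl}/rest/api/2/search?${params}`,
    headers: { Authorization: `Basic ${credentials}`, Accept: "application/json" },
  };
}

// Step 1b: send the request (needs Node 18+ for the global fetch).
async function fetchIssues(baseUrl, user, token, jql) {
  const { url, headers } = buildSearchRequest(baseUrl, user, token, jql);
  const response = await fetch(url, { headers });
  if (!response.ok) throw new Error(`Jira request failed: ${response.status}`);
  const body = await response.json();
  return body.issues; // first page only; pagination is left out of this sketch
}

// Step 3: render [label, value] rows as a small HTML table.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

function renderTable(title, rows) {
  const body = rows
    .map(([label, value]) => `<tr><td>${escapeHtml(label)}</td><td>${escapeHtml(value)}</td></tr>`)
    .join("\n");
  return `<h2>${escapeHtml(title)}</h2>\n<table>\n${body}\n</table>`;
}
```

Keeping the request construction separate from the network call makes the authorization part easy to check without touching a real Jira server.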

Quick wins with basic prompts

Initially, I wasn’t entirely sure which statistics I needed, so I started with very basic calculations, like the number of completed story points per sprint. My initial prompt was very simple, and after a few fixes (authorization and identification of the custom field where we store story points), I had a script that did exactly what I expected.
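As a rough illustration of that first statistic, the sketch below sums completed story points per sprint from issues shaped like a Jira search response. The field IDs `customfield_10016` and `customfield_10020` are hypothetical placeholders, because custom field IDs differ between Jira instances. The sketch also assumes the sprint field holds an array of objects with a `name` property, which is not true for every Jira version.

```javascript
// Hypothetical field IDs: look up the real ones for your Jira instance.
const STORY_POINTS_FIELD = "customfield_10016";
const SPRINT_FIELD = "customfield_10020";

// Sum the story points of completed issues, grouped by sprint name.
function completedPointsPerSprint(issues) {
  const totals = new Map();
  for (const issue of issues) {
    const fields = issue.fields;
    // Assumption: an issue is completed when its status category key is "done".
    if (fields.status?.statusCategory?.key !== "done") continue;
    const points = Number(fields[STORY_POINTS_FIELD]) || 0;
    const sprints = fields[SPRINT_FIELD] || [];
    // Assumption: credit the points to the last sprint the issue was part of.
    const lastSprint = sprints[sprints.length - 1];
    if (!lastSprint) continue;
    totals.set(lastSprint.name, (totals.get(lastSprint.name) || 0) + points);
  }
  return totals;
}
```

Crediting points to the last sprint is only one possible rule. An issue that spilled over from an earlier sprint could reasonably be counted differently, and that is exactly the kind of detail a prompt has to spell out.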

The script was well-formatted, and even though I’m not a JavaScript programmer, I could understand what those 100 lines of code were doing. I was surprised, suddenly felt motivated to play product owner, and started brainstorming big ideas. 😀

Prompting was easy, and I managed to add a few more statistics and one chart. Occasionally, there was a syntax error, so I had to copy the error output and give it back to ChatGPT for a fix.

Unexpected roadblocks

1. Reaching AI’s response limits

Suddenly, I got a warning:

"You have 15 responses from o1-preview remaining. If you hit the limit, responses will switch to another model until it resets on November 19, 2024."

I didn’t know about the limit, so I was surprised and a bit upset that I would have to pause my “coding session.” Unfortunately, I reached the limit right at the moment there was an issue with one of the charts, and I couldn’t figure it out myself.

So I had to wait 6 days to continue.

When the limit was reset, I had a new set of features I wanted to implement, so I wrote a long prompt full of instructions on what to fix, what to add, and how it should be visualized on the HTML page.

2. Waiting on ChatGPT to generate large scripts

o1-preview took more than 1 minute and 30 seconds of “thinking” before it started writing the script. By then the script had grown to over 1,000 lines, which introduced another issue: ChatGPT took quite a while just to write out each new version. It’s interesting to watch ChatGPT “type” the code (almost like a human responding), but staring at the screen for more than a minute while it renders is not ideal.

Eventually, I ended up multitasking, because my “coding session” with AI was 10% checking the output, 5% writing the prompt, and 85% just waiting for ChatGPT to give me a new version of the script. I really wish it could append to or fix parts of the code without regenerating everything. Human programmers don’t rewrite the entire codebase when they add a new feature, so I wish ChatGPT were more “human” in that regard (even though I know it works differently from the human brain).

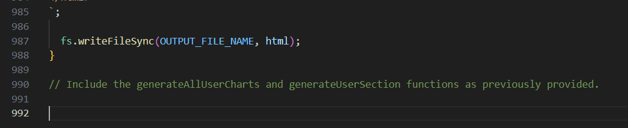

3. The problem with skipped code

As my script continued to grow, I found that ChatGPT would skip some parts of the code and just give me instructions like:

“Include the generateAllUserCharts and generateUsersSection functions as previously provided.”

That would be fine if I always knew exactly which functions it skipped. Instead, I ended up with a script that didn’t work. I had to investigate the differences between the latest version of the script and the previous one. It’s possible, but it’s not something you want to do multiple times in an hour.
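One way to speed up that comparison is to list the functions that were declared in the previous version but are gone from the new one. The sketch below uses a simple regular expression rather than a real parser, so it only detects `function name(` declarations and misses arrow functions and methods. The helper names `declaredFunctionNames` and `missingFunctions` are made up for this example.

```javascript
// Collect names declared as `function name(` (including `async function name(`).
// Heuristic only: arrow functions, methods, and generators are not detected.
function declaredFunctionNames(source) {
  const names = new Set();
  const pattern = /\bfunction\s+([A-Za-z_$][\w$]*)\s*\(/g;
  for (const match of source.matchAll(pattern)) names.add(match[1]);
  return names;
}

// Return the functions present in the previous version but absent from the new one.
function missingFunctions(previousSource, newSource) {
  const current = declaredFunctionNames(newSource);
  return [...declaredFunctionNames(previousSource)].filter((name) => !current.has(name));
}
```

Running `missingFunctions` on the two script versions (for example, after reading both files with `fs.readFileSync`) gives a short list of names to copy back from the older version.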

So I started adding lines to my prompts, such as:

“Give me the full version of the script.”

But even direct instructions like that didn’t always work on the first try. I often had to repeat the instruction, and usually got the full script on the second or third attempt.

4. Burning through message limits

Remember that message limit I discovered earlier? Well, with this skipping issue, I burned through more messages because I’d repeat the same request multiple times before I got what I asked for…

I hit the limit multiple times, so it took a while before I finally got the result I’d brainstormed. The script was almost 2,500 lines long. It was almost perfect, but there were still bugs. The biggest issue was that some charts showed data for someone else instead of the selected users, and even though I tried multiple times to explain what was wrong, I just couldn’t get ChatGPT to fix it. Several times, it fixed one bug but introduced a new one.

Combine that with the message limit, plus the time to render the script, plus the time to convince ChatGPT to give me the full version—and you can imagine how frustrating this got.

Experimenting with multi-file structures

I came up with what I thought was a “brilliant idea”: every big application consists of multiple files. If I split my script into multiple files, we could save time with rendering and avoid having to convince it to give me the full version. So I instructed ChatGPT to split the script into multiple files based on logical structure (fetching data, calculating the statistics, and generating the HTML visualization). I was surprised by how well ChatGPT interpreted my instructions and even suggested more granular separation. I ended up with five files with a logical structure.

Everything seemed great—until I realized that the total lines across these five files were significantly fewer than in the original script. My worries were confirmed when I ran it and saw that some of the charts and tables had disappeared. ChatGPT had basically forgotten parts of the original instructions. I’d probably hit the context window limit, and it started ignoring my earlier details.

Starting from scratch with consolidated prompts

I went back to the chat and modified one of the prompts. My plan was to ask ChatGPT for a single prompt containing all the instructions I’d given, so that I could start over in a new chat and hopefully capture everything. By that time, the new model (o1) had finally been released, with new features like canvas. I got a pretty long prompt that seemed to include all my instructions, so I copied it and started a new chat.

Unfortunately, the output script was nowhere near what I hoped for. I would have to basically start from scratch to get a usable result.

Are there better options for AI coding?

You might know of other AI models or tools that would be more suitable for this task. Since I was working with company data, I wanted to use ChatGPT Enterprise, which I’m allowed to use on company systems. I anonymized my prompt a bit and tried it using a paid Claude subscription as well, but I ran into very similar issues. First, it took multiple interactions before the script started looking promising; then, once again, I hit the output limit and got a truncated result.

Final thoughts: AI as a supplement, not a substitute

With ChatGPT (and likely other GPT models), you can quickly create a draft of a simple application. I was really impressed by how quickly I got the first version of the working script. But I can’t imagine using this approach for something more complex or for a final product that’s supposed to be bug-free.

I know programming, so some steps weren’t hard for me. But if you didn’t know how to code at all, it would be much tougher to rely on AI as your only developer. Programming isn’t only about writing code; it’s also about compilation, source code versioning, and deployment—and for all of these, you’d either have to learn them or discuss them with AI and hope you get good guidance.

I think everyone should experiment with AI to discover its current limits and see how to leverage its capabilities. But for now, you’d still be faster hiring a real programmer who might be slower for the first version of an application but can later quickly adjust and fix bugs.